The Privacy Misconception in AI Services

Nowadays, it feels like everyone at work has an imaginary friend, an incredibly smart one that helps us write code, polish emails, and talk through tough decisions. We treat this friend like a vault, dumping our secrets and sensitive data into the chat box because our "Enterprise Subscription" makes us feel safe. We see the "not used for training" label and think we're in a private bubble.

But here’s the reality check: We’ve mistaken a sense of privacy for actual confidentiality. These AI platforms aren’t just fleeting chats. They are massive, centralized databases. "Not used for training" doesn't mean your data isn't sitting on a server somewhere, indexed and accessible to admins. Those "secrets" you told your imaginary friend? They’re logged, stored, and definitely not as confidential as you think.

In this blog, I want to focus on ChatGPT. I’m going to pull back the curtain on why our “private” conversations aren't as safe as we think and show you exactly how easy it is for an attacker to turn these chats against us.

So… why start with ChatGPT?

The obvious reason is that it’s the most popular AI platform in the enterprise. But more importantly, as a security researcher, I had a unique opportunity to see what’s actually happening inside real enterprise environments, across multiple customers. I’ve been digging into how people really use the tool, and I can sum up our findings in three letters: TMI. Users are essentially using the chat box as a dumping ground for, well, everything. Our scan of mid-size and large organizations revealed a total treasure trove: dozens of active API keys, leaked tokens, private code snippets, and enough PII to make you rich if you sold it on the Darknet.

The OpenAI Compliance API: A Double-Edged Sword

The OpenAI Compliance API is designed to help organizations maintain oversight and meet regulatory requirements. It's accessible only to enterprise workspace administrators, which makes it seem like a controlled, secure feature.

Key capabilities include:

- Retrieving conversation history across the entire workspace

- Accessing shared files and uploaded documents

- Extracting code snippets and data shared in chats

- Monitoring user activity and content

These capabilities serve legitimate purposes: compliance monitoring, data retention policies, security audits, and regulatory requirements. The OpenAI Compliance API is working exactly as designed.

The challenge isn't a flaw in the Compliance API. It's the inherent nature of centralized data access. When compliance and oversight require full visibility, that same visibility can be exploited if access controls fail. While this is valuable for compliance and governance, it also creates a significant security risk: anyone with access to the Compliance API key can read everything shared in your workspace. Everything means chats, shared files, code snippets, memory, and so much more, including secrets.

The permission model is broad, and there's limited granular scoping. If you have the Compliance API key, you essentially have access to the entire treasure trove of workspace data.

Last month, here at Token Security, we released an open-source tool designed to give you deep visibility into your Custom GPTs. By leveraging the OpenAI Compliance API and all its endpoints, our GCI tool provides the transparency you need. Check it out here.

Now, we’ve got enough info to talk about the scenario where that API key falls into the wrong hands, and we accidentally find ourselves vulnerable to an attack.

Attack Scenario: When the Keys Fall into the Wrong Hands

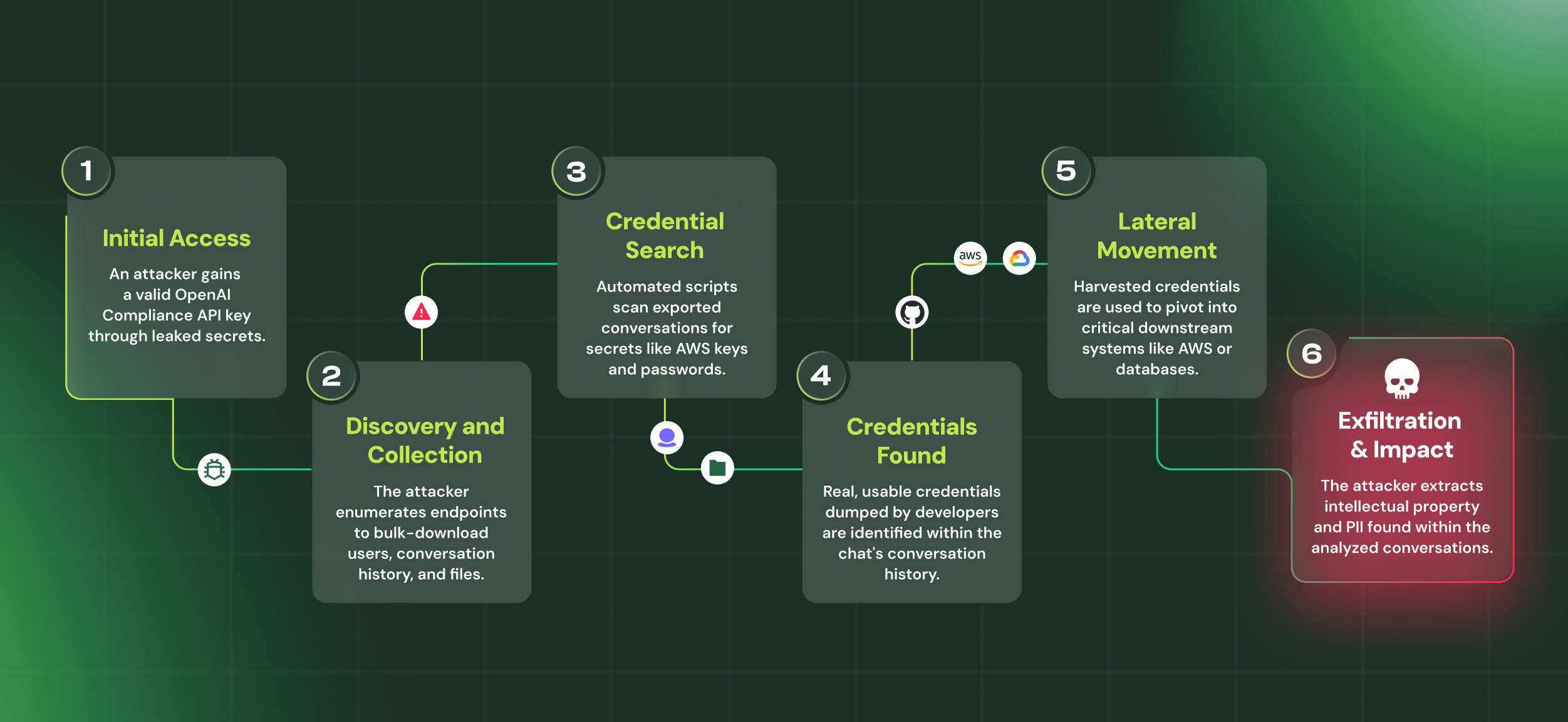

Let's walk through a realistic attack scenario:

- Initial Access - The attacker gains possession of an OpenAI Compliance API key, whether through a leaked GitHub secret, stolen admin credentials, an insider threat, or any of many other possible vectors.

- Discovery and Compliance API Enumeration - The attacker uses valid Compliance API endpoints to enumerate the workspace and retrieve sensitive data:

- GET /compliance/workspaces/{workspace_id}/users → lists all users, roles, and emails

- GET /compliance/workspaces/{workspace_id}/conversations → retrieves all conversations, messages, metadata, files, and tool calls

- GET /compliance/workspaces/{workspace_id}/users/{user_id}/files/{file_id} → downloads user-uploaded or conversation-generated files

- Automated Secret Scanning - The attacker scans the exported conversations and files for sensitive information, extracting:

- AWS keys, DB connection strings

- API tokens, SSH keys, passwords

- Internal URLs and service endpoints

- Call stacks, code snippets, and software architecture details

- Lateral Movement - The attacker uses the harvested credentials to pivot into critical systems:

- AWS: access S3/EC2/RDS

- GitHub: clone or modify private repositories

- Databases: extract customer, financial, operational data, and more

- IdP: create backdoor accounts or escalate privileges

- Intelligence Gathering - The attacker analyzes conversations and files to collect company IP, customer data, employee PII, strategic plans, and information about the organization's security posture
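The automated secret scanning step above is cheap to reproduce, which is also why defenders can run the same kind of scan on their own exported conversations before an attacker does. Below is a minimal sketch in Python. The patterns are a small illustrative subset, not a complete rule set, and the message shape (dicts with `id` and `text` keys) is a hypothetical stand-in rather than the Compliance API's actual response format.

```python
import re

# Illustrative patterns only; dedicated secret scanners ship far larger rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "db_connection_string": re.compile(
        r"\b(?:postgres(?:ql)?|mysql|mongodb(?:\+srv)?)://[^\s:@/]+:[^\s@/]+@[^\s/]+"
    ),
    "generic_api_key": re.compile(
        r"\b(?:api[_-]?key|token|secret)\b\s*[=:]\s*['\"]?[A-Za-z0-9_\-]{16,}",
        re.IGNORECASE,
    ),
}


def mask(value):
    """Keep the first four characters so a finding is recognizable but not reusable."""
    return value[:4] + "*" * (len(value) - 4)


def scan_text(text):
    """Return a sorted list of (pattern_name, masked_match) pairs found in text."""
    findings = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((name, mask(match.group(0))))
    return sorted(findings)


def scan_messages(messages):
    """Scan an iterable of message dicts (hypothetical 'id' and 'text' keys).

    Returns a dict mapping message id to its findings; clean messages are omitted.
    """
    report = {}
    for message in messages:
        hits = scan_text(message["text"])
        if hits:
            report[message["id"]] = hits
    return report
```

Findings are masked on purpose: a report that repeats the secrets in full would just become one more place for them to leak.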

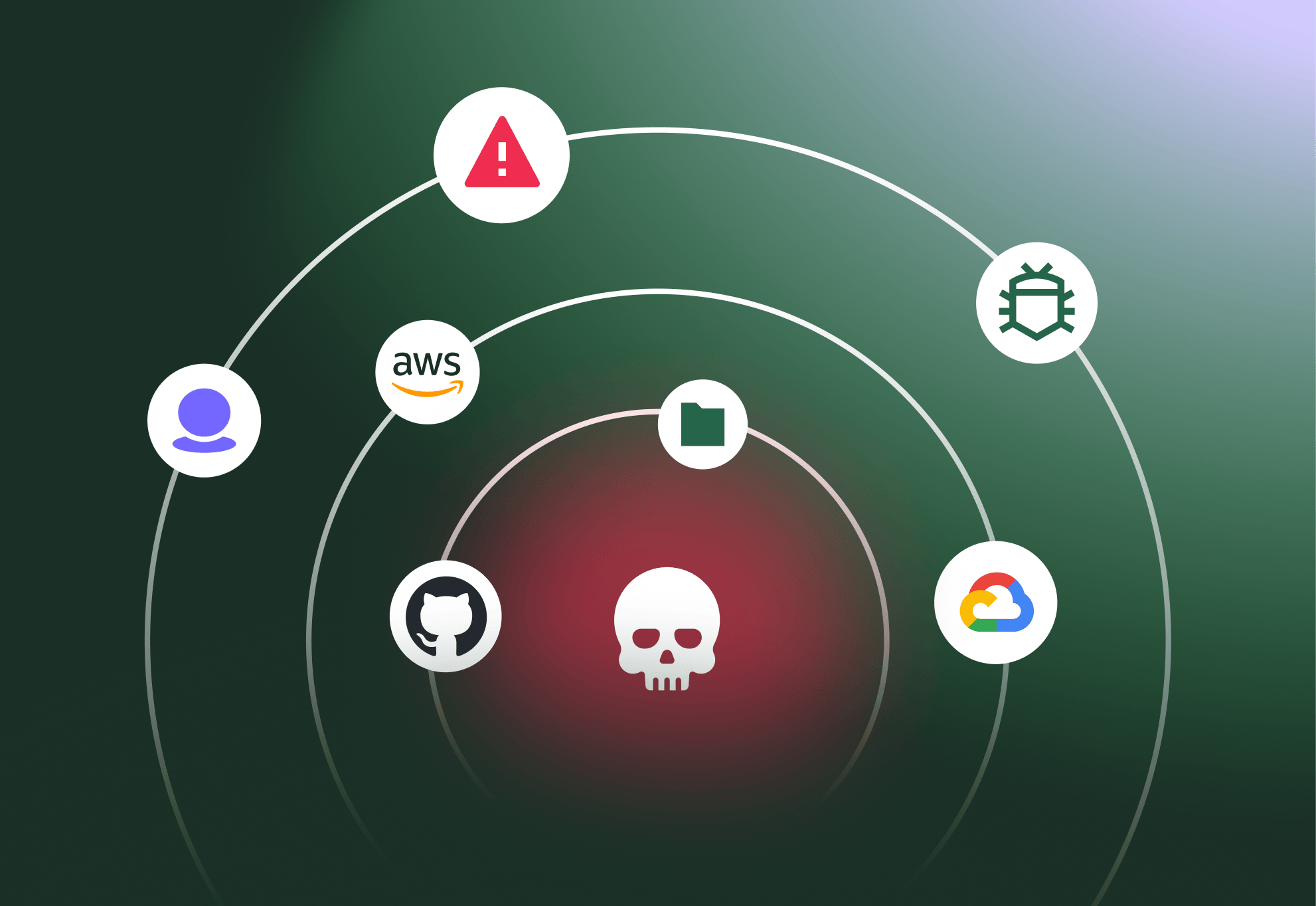

Impact of a Successful Attack

A successful attack can cause significant organizational damage, including:

- Exfiltration of code, secrets, and customer data

- Persistent access across infrastructure

- Privilege escalation and potential supply-chain compromise

- Business espionage and long-term strategic loss

- Reputation damage and financial loss

The real twist is that none of this requires a genius-level hack. It’s just a case of valid access meeting a very common habit: oversharing with your AI services while you’re just trying to get work done.

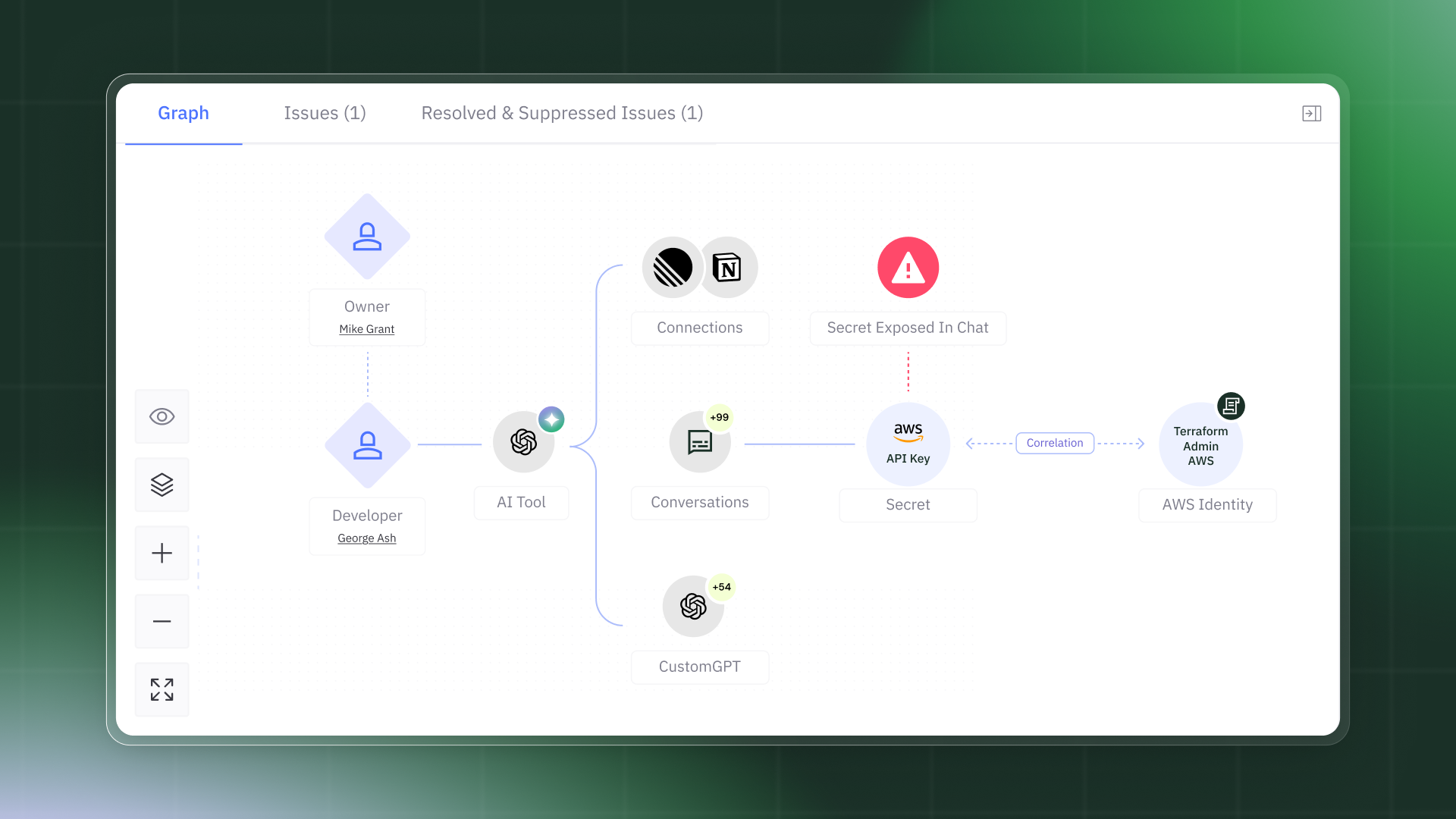

In this use case, Token would have enabled you to recognize this threat ahead of time. Token improves the security posture of your AI services by identifying threats and bad practices, ultimately preventing attacks like the one described above.

Should We Trust It Blindly?

Here's the uncomfortable truth: the information written in chats isn't shared with OpenAI for training, but it is saved and accessible through its Compliance API. If an attacker compromises OpenAI servers, they gain access to all of your data, because it is stored there. That runs contrary to the common belief that the license type means the data isn’t saved. Given the breaches major companies have already suffered, this scenario is not far-fetched.

What does this type of breach mean for you?

- Infrastructure Risk: A malicious actor with API access can discover credentials and use them to gain access to your entire infrastructure.

- IP Theft: Your company's intellectual property, strategic discussions, and confidential plans are sitting in a searchable database and can be leaked easily.

- Privacy Exposure: Sensitive personal information about your employees is exposed. That means not just work details, but personal conversations, health information, even which cake recipe you chose for your anniversary. All of it can be sold on the Darknet, making an attacker very rich.

The enterprise license provides protection against OpenAI using your data, but it doesn't protect you from authorized access being misused or unauthorized access through compromised credentials.

Recommendations: Treating AI Workspaces Like What They Are

- Assume persistence: Remember that chat history is another location where company IP can be exposed. Treat it like you would email or Slack, which are permanent and potentially discoverable.

- Never share raw secrets: Use variables, placeholders, and password manager mechanisms. Instead of pasting API_KEY=sk-abc123..., reference it as API_KEY=$SECURE_KEY and retrieve it from your secrets manager.

- Implement least privilege: Limit who has access to these administrative API keys. Rotate them regularly. Monitor their usage.

- Educate your team: Make sure developers and employees understand that "enterprise" doesn't mean "private." Data that is indexed can be accessed.

- Separate personal from professional: Consult on personal matters using your personal email, not the corporate workspace. That health concern or family issue doesn't need to be in your company's searchable chat history. You also don't want your manager retrieving "memory" about you through the administrative API and discovering that you're actively job searching or planning to leave the company.
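The "never share raw secrets" recommendation above can be partly automated by redacting text before it is pasted into a chat. Here is a minimal sketch in Python, assuming a few common secret shapes. The rules, the placeholder names other than `$SECURE_KEY`, and the use of an environment variable as a stand-in for a secrets manager are all illustrative assumptions, not a complete or official mechanism.

```python
import os
import re

# Hypothetical rules: (pattern, replacement). Extend them for your own secret formats.
REDACTION_RULES = [
    # Keys shaped like "sk-..." become a placeholder reference.
    (re.compile(r"\bsk-[A-Za-z0-9_\-]{6,}"), "$SECURE_KEY"),
    # AWS access key IDs.
    (re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"), "$AWS_ACCESS_KEY_ID"),
    # Passwords embedded in connection URLs: keep user and host, drop the password.
    (re.compile(r"(://[^\s:@/]+:)[^\s@/]+(@)"), r"\1$DB_PASSWORD\2"),
]


def redact(text):
    """Replace anything that matches a redaction rule with its placeholder."""
    for pattern, replacement in REDACTION_RULES:
        text = pattern.sub(replacement, text)
    return text


def get_api_key(env=os.environ):
    """Resolve the real key at runtime; the environment stands in for a secrets manager."""
    key = env.get("SECURE_KEY")
    if key is None:
        raise RuntimeError("SECURE_KEY is not set")
    return key
```

For example, `redact("API_KEY=sk-abc123def456")` returns `API_KEY=$SECURE_KEY`, so the question you paste into the chat still makes sense while the real value never leaves your machine. Pattern-based redaction will miss secrets it has no rule for, so treat it as a safety net rather than a guarantee.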

Visibility, control, and governance are essential to ensure a secure AI environment. To learn how Token Security can help with an identity-first approach to AI agent security, request a demo of our solution today.
